The Surge in Generative AI

The surge of Generative AI (GenAI) has triggered the most radical transformation in data center design since the dawn of the internet. We are moving away from the era of "General Purpose Compute," where servers handled varied, smaller tasks, into the era of the "AI Factory." In this new paradigm, AI isn't just increasing demand for capacity; it is fundamentally altering the physics of the facility. The design focus has shifted from simple real estate ("white space") to a complex triad of ultra-high power density, precision thermal management, and lossless network fabrics.


The Density Explosion

1. Power Density: The Move Toward the 130kW Rack

In a traditional enterprise data center, power density was predictable. A standard rack consumed between 5kW and 10kW, allowing for simple air-based cooling and standard electrical distribution. However, the arrival of high-performance GPU clusters - essential for the "brute force" training of Large Language Models (LLMs) - has shattered those standards.

Modern AI server blocks, such as those housing NVIDIA H100 or Blackwell GPUs, are pushing typical rack densities into the 30kW to 72kW range.

- The Engineering Challenge: Distributing this much power requires a redesign of the electrical chain. Operators are moving toward 415V or 480V distribution directly to the rack. Higher voltage means lower current for the same power, which reduces resistive losses and removes intermediate step-down transformer stages.

- The 130kW Horizon: Next-generation AI clusters are already being engineered for sustained loads of 100kW to 130kW per rack. At this level, even a minor electrical inefficiency becomes significant heat: a 1% loss at 130kW is 1.3kW of waste heat in a single rack. Power quality and "clean" delivery become prerequisites for preventing hardware failure.
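The effect of distribution voltage on rack current can be sketched with the standard balanced three-phase relation I = P / (√3 × V × PF). This is an illustrative calculation only: the 0.95 power factor and the 208V comparison baseline are assumptions, not figures from this article, and `line_current_amps` is a hypothetical helper name.

```python
import math


def line_current_amps(power_kw: float, line_voltage: float, power_factor: float = 0.95) -> float:
    """Line current of a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_kw * 1000.0 / (math.sqrt(3) * line_voltage * power_factor)


# Rack loads come from the text; 208 V is an assumed legacy baseline for comparison.
for rack_kw in (10, 72, 130):
    for volts in (208, 415, 480):
        amps = line_current_amps(rack_kw, volts)
        print(f"{rack_kw:>3} kW at {volts} V: {amps:6.1f} A")
```

For a fixed load, current falls in proportion to voltage, and resistive loss in a given conductor falls with the square of the current, which is why higher-voltage distribution becomes attractive as rack loads climb.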

The Physics of Liquid

2. Thermal Management: Why Liquid Cooling is Mandatory

We are currently witnessing the death of air cooling for high-performance workloads. Air is a poor conductor of heat and has very little heat capacity per unit volume, so it cannot absorb the intense heat flux generated by AI accelerators. Air cooling systems typically peak at around 30kW to 50kW per rack; beyond that, the volume of air required would have to move at extreme speeds, creating noise and vibration that can damage the hardware.

Per unit volume, a liquid coolant such as water can absorb and transport roughly 3,000 times more heat than air.
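The gap between air and liquid can be checked with the basic heat-transport relation: flow = heat / (density × specific heat × temperature rise). The sketch below uses approximate room-temperature properties for air and water and an assumed 10 K coolant temperature rise; real systems use different coolants and temperature deltas, so treat the output as an order-of-magnitude illustration.

```python
# Approximate fluid properties near room temperature (assumed round values).
AIR = {"density": 1.2, "specific_heat": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "specific_heat": 4180.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_per_s(heat_watts: float, fluid: dict, delta_t_kelvin: float) -> float:
    """Flow needed to carry heat_watts with a coolant temperature rise of delta_t_kelvin."""
    return heat_watts / (fluid["density"] * fluid["specific_heat"] * delta_t_kelvin)


rack_watts = 100_000.0  # a 100 kW rack
delta_t = 10.0          # assumed 10 K temperature rise for both fluids

air_flow = volumetric_flow_m3_per_s(rack_watts, AIR, delta_t)
water_flow = volumetric_flow_m3_per_s(rack_watts, WATER, delta_t)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs about {air_flow / water_flow:.0f}x the volume of water")
```

With these assumed properties the ratio lands in the low thousands, in line with the "roughly 3,000 times" figure commonly quoted.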

- Direct-to-Chip (D2C): This is becoming the industry gold standard. Cold plates are mounted directly onto the GPUs, carrying coolant to the heart of the heat source. This lets chips sustain full performance without "thermal throttling" (where the chip slows itself down to avoid overheating).

- Power Usage Effectiveness (PUE) Gains: By reducing the need for massive, energy-hungry chiller plants and computer room fans, liquid cooling allows data centers to reach a PUE of 1.1 or lower. PUE is total facility power divided by IT power, so at 1.1 every 100W delivered to IT equipment costs only about 10W of cooling and other overhead, compared to 40W+ in older air-cooled facilities.
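The PUE arithmetic is simple enough to verify directly. PUE is defined as total facility power divided by IT equipment power, so the overhead per watt of IT load is PUE minus one. The function names below are illustrative, and the 10 MW IT load is an assumed example value.

```python
def pue(total_facility_watts: float, it_watts: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    return total_facility_watts / it_watts


def overhead_watts(it_watts: float, pue_value: float) -> float:
    """Non-IT power (cooling, distribution losses, etc.) implied by a given PUE."""
    return it_watts * (pue_value - 1.0)


it_load_watts = 10_000_000.0  # assumed 10 MW of IT load, for illustration
for label, value in (("liquid-cooled", 1.1), ("older air-cooled", 1.4)):
    extra_mw = overhead_watts(it_load_watts, value) / 1e6
    print(f"{label}: PUE {value} -> {extra_mw:.1f} MW of overhead")
```

At the same IT load, moving from a PUE of 1.4 to 1.1 cuts the overhead to a quarter of its previous value.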

The Need for Lossless Speed

3. Networking: The Rise of the Lossless Fabric

Training a model like GPT-4 requires thousands of GPUs to work in tight synchronization. If one GPU has to wait for data from another because of network congestion, every other GPU in that training step waits with it, and a multibillion-dollar cluster sits idle. This is known as the "Tail Latency" problem: the slowest participant sets the pace for everyone.
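A toy model makes the cost of a single straggler concrete. In a synchronous training step, every worker must finish before the next step begins, so the step time is the maximum of the per-worker times. The worker count and timings below are invented for illustration, not measurements.

```python
def step_time_ms(worker_times_ms: list[float]) -> float:
    """A synchronous step finishes only when the slowest worker finishes."""
    return max(worker_times_ms)


def cluster_efficiency(worker_times_ms: list[float]) -> float:
    """Fraction of total GPU time spent working rather than waiting."""
    busy = sum(worker_times_ms)
    available = len(worker_times_ms) * step_time_ms(worker_times_ms)
    return busy / available


# Hypothetical cluster: 1,023 GPUs finish in 100 ms, one is delayed to 150 ms.
times = [100.0] * 1023 + [150.0]
print(f"Step time:  {step_time_ms(times):.0f} ms")
print(f"Efficiency: {cluster_efficiency(times):.1%}")
```

One delayed worker out of 1,024 stretches the step by 50% and leaves roughly a third of the cluster's GPU time unused in this example.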

- High-Radix Topologies: AI demands a shift from the oversubscribed "Leaf-Spine" networks common in enterprise facilities to non-blocking "Fat-Tree" or "Torus" topologies built from high-radix switches. These designs aim to give every GPU a predictable, low-latency path to every other GPU.

- Specialized Protocols: Traditional Ethernet is "lossy": it drops packets when congested and relies on retransmission to recover them, which is unacceptable for AI. This has driven the adoption of InfiniBand or RoCE (RDMA over Converged Ethernet). These protocols allow for "Remote Direct Memory Access," letting one server read the memory of another without involving the remote CPU, slashing latency to the microsecond level.

- 800G Optics: To keep up with GPU throughput, data centers are rapidly upgrading to 400G and 800G optical interconnects, using fiber optics to bridge the gap between racks at the speed of light.
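Two back-of-the-envelope calculations help size the fabric described above. The first uses the textbook three-tier k-ary fat-tree, in which k-port switches support k³/4 endpoints; the second is plain serialization time for a payload on a single link. Both are simplified sketches: real deployments vary in tiering and oversubscription, and the 1 GB payload is an assumed example.

```python
def fat_tree_hosts(k: int) -> int:
    """Endpoints supported by a three-tier k-ary fat-tree of k-port switches."""
    if k % 2:
        raise ValueError("k must be even")
    return k ** 3 // 4


def fat_tree_switches(k: int) -> int:
    """Total switches: k pods of k switches each, plus (k/2)^2 core switches."""
    if k % 2:
        raise ValueError("k must be even")
    return k * k + (k // 2) ** 2


def transfer_time_ms(payload_gigabytes: float, link_gbps: float) -> float:
    """Serialization time only; ignores protocol overhead and propagation delay."""
    return payload_gigabytes * 8.0 / link_gbps * 1000.0


for radix in (32, 64):
    print(f"k={radix}: {fat_tree_hosts(radix)} endpoints, {fat_tree_switches(radix)} switches")

for gbps in (400.0, 800.0):
    print(f"1 GB over {gbps:.0f}G: {transfer_time_ms(1.0, gbps):.1f} ms")
```

Doubling switch radix multiplies the endpoint count by eight, which is why high-radix switches matter so much at cluster scale.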

The Numbers Behind the Strain

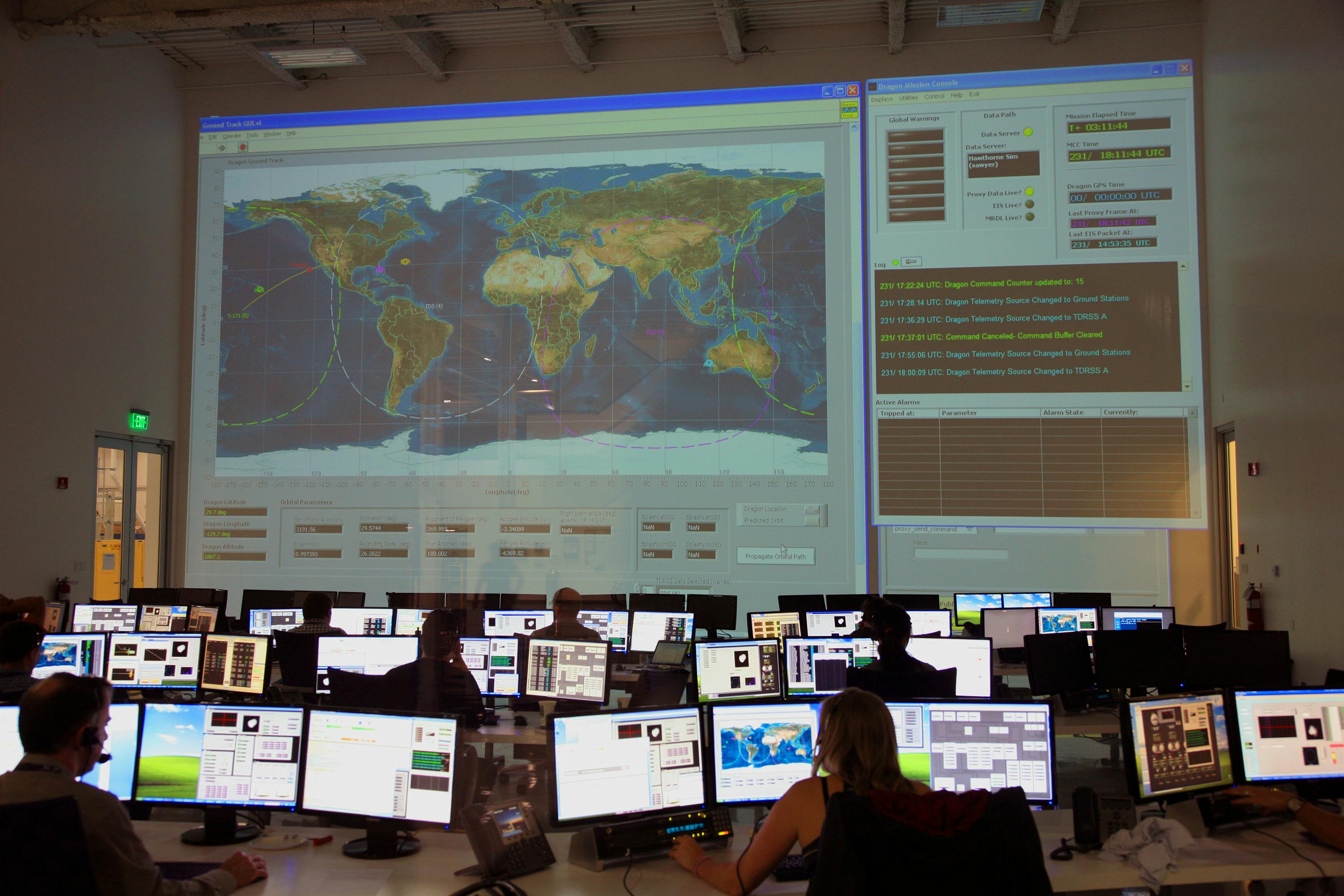

4. The Macro-Challenge: A Global Grid in Crisis

AI’s computational intensity is straining global power grids to their breaking point. In major hubs like Northern Virginia or Dublin, utility providers are telling data center developers they may have to wait 5 to 7 years for a new grid connection.

- Growth Projections: Global data center power demand is projected to increase by 160% to 200% by 2030.

- The AI Share: In 2023, AI workloads accounted for roughly 14% of total data center power. By 2027, that share is expected to nearly double to 27%.

- The "Power-First" Strategy: This scarcity has made power, not land, the primary asset. Developers are now building in "non-traditional" locations simply because those areas have available megawatts, leading to a geographical decentralization of internet infrastructure.
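The projections above can be restated as multipliers and annual rates. A 160% to 200% increase means demand reaches 2.6x to 3.0x its starting level, and the AI share moving from 14% in 2023 to 27% in 2027 implies a compound annual rate over four years. The helper names are illustrative, and the calculation assumes smooth compounding, which the article does not claim.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiple of the starting value."""
    return 1.0 + percent_increase / 100.0


def compound_annual_rate(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes start to end over the given years."""
    return (end / start) ** (1.0 / years) - 1.0


for pct in (160.0, 200.0):
    print(f"+{pct:.0f}% -> {growth_multiplier(pct):.1f}x demand")

share_rate = compound_annual_rate(14.0, 27.0, 2027 - 2023)
print(f"AI share growth, 2023 to 2027: {share_rate:.1%} per year")
```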

Conclusion: The Integrated AI Factory

The AI Data Center is no longer just a building full of servers; it is a highly tuned AI Factory. In this environment, the electrical system, the cooling loop, and the network fabric are not independent systems - they are a single, integrated organism.

